Note: This article is a bit technical, and will be most helpful for developers involved in solution design.

When a SuiteScript goes beyond a basic get-and-set, it is important to devote time to solution design. In some of my past tasks, solution design took up most of my time, while writing the code was a much smaller fraction.

If you have to write an algorithm in your code, you will want that algorithm to be as efficient as possible. A quick way to reason about algorithm efficiency is Big-O notation, which gives a rough indication of how an algorithm's running time grows as its input grows.

What is Big-O notation?

Big-O describes roughly how the time an algorithm takes grows with the size of the dataset it processes. It is written as an O followed by a simple formula in terms of N in parentheses, such as O(log(n)). N represents the size of the data, and the O expresses a ceiling on how the processing time grows as N increases.

Let's look at some charts.

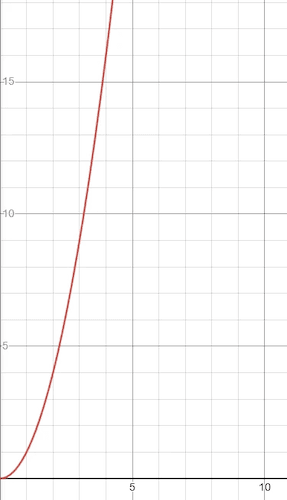

O(n^2) Visualization

The chart above represents O(n^2).

This is the same as the chart for y=x^2. The x-axis represents N, the size of the data, while the line is a rough estimate of how much time it will take for the algorithm to process all the data. As the data size N increases, the time to process increases rather rapidly.

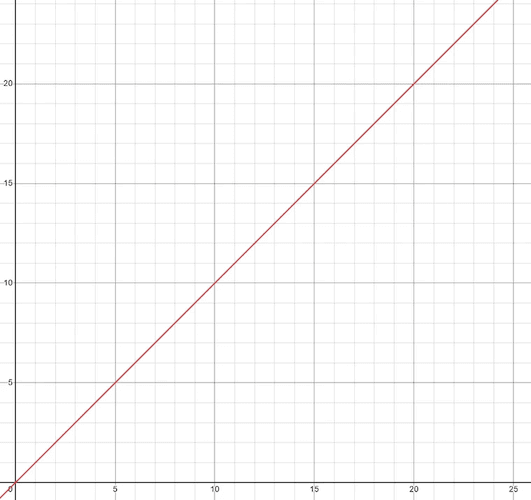

O(n) Visualization

This is a chart for O(n). As the data size increases, the estimated time increases linearly with the data size. This is considered a relatively good result for an algorithm.

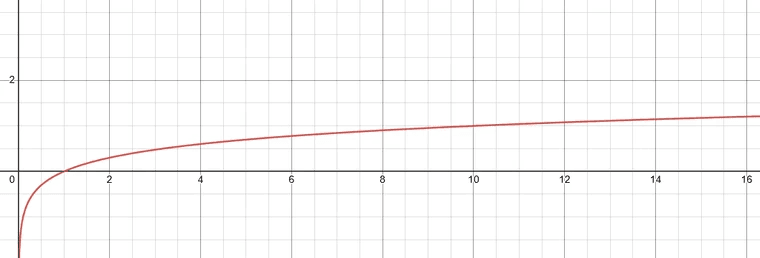

O(log(n)) Visualization

This is a chart for O(log(n)). As the data size increases, the processing time rises only slightly, and the rate of increase slows down as the data grows. This is uncommon, and is typically achieved only by algorithms that repeatedly halve the problem, such as a binary search over sorted data. You probably will not run into an O(log(n)) algorithm working in NetSuite, but if you do come up with an O(log(n)) algorithm for a NetSuite problem, the achievement should be commended.
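
As a rough illustration of logarithmic growth, here is a minimal sketch of a binary search in plain JavaScript that counts how many halving steps it takes. The function names and the generated list of IDs are hypothetical and are not part of any NetSuite API.

```javascript
// Binary search over a sorted array, counting the number of halving steps.
function binarySearchSteps(sortedValues, target) {
  let low = 0;
  let high = sortedValues.length - 1;
  let steps = 0;
  while (low <= high) {
    steps += 1;
    const mid = Math.floor((low + high) / 2);
    if (sortedValues[mid] === target) {
      return { index: mid, steps: steps };
    }
    if (sortedValues[mid] < target) {
      low = mid + 1; // discard the lower half
    } else {
      high = mid - 1; // discard the upper half
    }
  }
  return { index: -1, steps: steps }; // not found
}

// Hypothetical data: sorted IDs 0..n-1.
function makeSortedIds(n) {
  return Array.from({ length: n }, function (_, i) { return i; });
}

[1000, 1000000].forEach(function (n) {
  const result = binarySearchSteps(makeSortedIds(n), n - 1);
  console.log("n = " + n + ": found in " + result.steps + " steps");
});
```

Going from a thousand entries to a million multiplies the data by a thousand, yet the search never needs more than 20 steps for the larger list.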

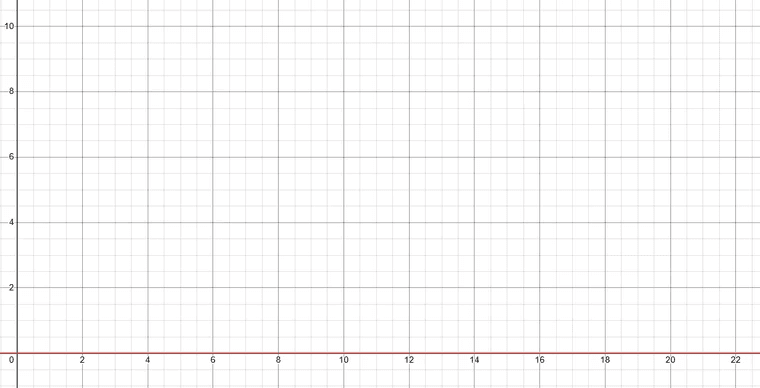

O(1) Visualization

Finally, this is a chart for O(1). As the data size increases, the time to process the data does not change. A basic get-and-set algorithm would follow O(1).

Simplifying Big-O Notation

Big-O notation does not use coefficients, as it is used primarily to measure the rough time efficiency of an algorithm. For example, an algorithm that follows O(2n) would be simplified to O(n).

When multiple algorithms are put together, the script as a whole is simplified to its fastest-growing term: O(n) + O(n^2) becomes O(n^2), because the O(n^2) part dominates the running time as N grows.
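
To make these two simplifications concrete, the sketch below counts steps for a few hypothetical algorithms. The function names are made up for illustration; they only compute step counts and do not model any real script.

```javascript
// Step counts for hypothetical algorithms, as functions of the data size n.
function twoPasses(n) {
  return 2 * n; // O(2n), simplified to O(n)
}

function linearPlusQuadratic(n) {
  return n + n * n; // O(n) + O(n^2)
}

function quadraticOnly(n) {
  return n * n; // O(n^2)
}

[10, 100, 1000].forEach(function (n) {
  // Share of the combined step count that comes from the n^2 term alone.
  const share = quadraticOnly(n) / linearPlusQuadratic(n);
  console.log(
    "n = " + n +
    ", 2n = " + twoPasses(n) +
    ", n + n^2 = " + linearPlusQuadratic(n) +
    ", n^2 share = " + (share * 100).toFixed(1) + "%"
  );
});
```

Doubling n doubles the step count of `twoPasses`, exactly as it would for a single pass, which is why the coefficient is dropped. In the combined case, the n^2 term accounts for nearly all of the steps once n is large, which is why the smaller term is dropped.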

Measuring Big-O in Code: Examples

Let us look at some examples of measuring Big-O in code.

O(1) Example

Here is an example of a basic get-and-set:

    customerRecord.setValue({
        fieldId: "custentity_special_email",
        value: customerRecord.getValue({ fieldId: "email" })
    });

I would not consider this an algorithm, but for the sake of example, let us consider it as such. We could consider data size as the length of the "email" field's value, but running this get-and-set should take roughly the same amount of time regardless of the length. This algorithm would be considered O(1).

O(n) Example

    customerSearch.run().each(function(result) {
        // do some processes
        return true; // continue to the next result
    });

This code iterates over the results of a search. No further searches are nested inside it, so it would be considered O(n), with N representing the number of results from the search. Now, if the search were this:

    customerSearch.run().each(function(result) {
        // do some processes
        // do some more processes
        return true; // continue to the next result
    });

This would still be considered O(n). Remember that Big-O notation does not consider coefficients. This may take longer than the previous example, but what Big-O will tell you is that the total processing time still increases linearly with the number of results.
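
The linear behavior can be checked outside NetSuite with a small stand-in. The sketch below is plain JavaScript, not SuiteScript: `makeFakeResultSet` and `countCallbacks` are hypothetical helpers, and the stand-in only imitates the way `ResultSet.each` keeps iterating while the callback returns true (treating any falsy return as a stop is an assumption of this sketch).

```javascript
// Hypothetical stand-in for a search result set. It stops iterating as soon
// as the callback returns a falsy value (an assumption of this sketch).
function makeFakeResultSet(rows) {
  return {
    each: function (callback) {
      for (let i = 0; i < rows.length; i += 1) {
        if (!callback(rows[i])) {
          break;
        }
      }
    }
  };
}

// Counts how many times the callback runs for a result set with n rows.
function countCallbacks(n) {
  const rows = Array.from({ length: n }, function (_, i) {
    return { id: i + 1 };
  });
  let calls = 0;
  makeFakeResultSet(rows).each(function (result) {
    calls += 1; // one unit of work per result
    return true; // continue to the next result
  });
  return calls;
}

[100, 200, 400].forEach(function (n) {
  console.log("n = " + n + ": " + countCallbacks(n) + " callback runs");
});
```

The callback runs once per result, so doubling the number of results doubles the number of runs, however much work each run does.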

O(n^2) Example

Now let us consider this example:

    customerSearch.run().each(function(result) {
        customerSearch.run().each(function(result2) {
            // do some processes
            return true; // continue the inner iteration
        });
        return true; // continue the outer iteration
    });

For each customer result, the code runs the customer search again and processes every entry. This would be considered O(n^2), because the total processing time grows with the square of the number of results, like a parabola. If this is the best possible algorithm time-efficiency-wise that could be conjured up, so be it, but if the inner work does not depend on the current outer result, consider separating the nested iterations like this:

    customerSearch.run().each(function(result) {
        // do some processes
        return true; // continue to the next result
    });

    customerSearch.run().each(function(result) {
        // do some more processes
        return true; // continue to the next result
    });

While this might take up more lines of code and more memory, the two separate customer searches with no nesting would be O(n) + O(n), simplified down to O(n). The time for this algorithm to run now only increases linearly! This is a great improvement over an algorithm running in O(n^2) time.
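
Separating the iterations only works when the inner work does not need to pair each result with every other result. When it does, one common alternative (not taken from the code above) is to index the data in a single pass and then look values up. The sketch below uses plain objects as hypothetical stand-ins for customer results and finds duplicate emails both ways, assuming `Map` lookups take roughly constant time.

```javascript
// Nested approach: compares every customer with every other customer.
function findDuplicateEmailsNested(customers) {
  let comparisons = 0;
  const duplicates = new Set();
  customers.forEach(function (a, i) {
    customers.forEach(function (b, j) {
      comparisons += 1;
      if (i !== j && a.email === b.email) {
        duplicates.add(a.email);
      }
    });
  });
  return { duplicates: Array.from(duplicates).sort(), comparisons: comparisons };
}

// Indexed approach: one pass to count emails, one pass over the counts.
function findDuplicateEmailsIndexed(customers) {
  let steps = 0;
  const countsByEmail = new Map();
  customers.forEach(function (customer) {
    steps += 1;
    const seen = countsByEmail.get(customer.email) || 0;
    countsByEmail.set(customer.email, seen + 1);
  });
  const duplicates = [];
  countsByEmail.forEach(function (count, email) {
    steps += 1;
    if (count > 1) {
      duplicates.push(email);
    }
  });
  return { duplicates: duplicates.sort(), steps: steps };
}

// Hypothetical sample data.
const sampleCustomers = [
  { id: 1, email: "a@example.com" },
  { id: 2, email: "b@example.com" },
  { id: 3, email: "a@example.com" },
  { id: 4, email: "c@example.com" }
];

console.log(findDuplicateEmailsNested(sampleCustomers));
console.log(findDuplicateEmailsIndexed(sampleCustomers));
```

Both functions report the same duplicates, but the nested version's comparison count grows with the square of the number of customers, while the indexed version's step count grows linearly.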

List of Big-O Times by Efficiency

Here is a ranking of common Big-O times, from most efficient to least efficient:

- O(1)

- O(log(n))

- O(n)

- O(n log(n))

- O(n^2)

- O(2^n)

- O(n!)
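
To get a feel for how far apart these rankings are, the sketch below computes a rough step count for each one at a given data size. The names are hypothetical, and base-2 logarithms are an arbitrary choice, since Big-O ignores the base.

```javascript
// Computes n! with a simple loop.
function factorial(n) {
  let product = 1;
  for (let i = 2; i <= n; i += 1) {
    product *= i;
  }
  return product;
}

// One entry per growth rate in the ranking, in the same order.
const growthRates = [
  { name: "O(1)", steps: function () { return 1; } },
  { name: "O(log(n))", steps: function (n) { return Math.log2(n); } },
  { name: "O(n)", steps: function (n) { return n; } },
  { name: "O(n log(n))", steps: function (n) { return n * Math.log2(n); } },
  { name: "O(n^2)", steps: function (n) { return n * n; } },
  { name: "O(2^n)", steps: function (n) { return Math.pow(2, n); } },
  { name: "O(n!)", steps: factorial }
];

// Returns the rounded step count for every growth rate at data size n.
function growthTable(n) {
  return growthRates.map(function (rate) {
    return { name: rate.name, steps: Math.round(rate.steps(n)) };
  });
}

growthTable(16).forEach(function (row) {
  console.log(row.name + ": " + row.steps);
});
```

Even at a data size of only 16, the last two entries are already far larger than the rest. For very small values of n the order can differ; the ranking describes how the rates compare as n grows.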

Using Big-O Notation in NetSuite Development

Knowledge of Big-O notation is important for NetSuite development. It helps you estimate how a script's running time and governance usage will grow, and whether an algorithm should be offloaded from a User Event Script to, for example, a Map/Reduce script. It is not uncommon for NetSuite scripts to run over tens of thousands of records, even if you only expected the script to run over a handful.

Generally, for User Event Scripts, try to keep algorithms at O(1) or O(n). If an algorithm of O(n^2) or worse is needed, or you think the script will use a large amount of governance even with smaller datasets, consider offloading the algorithm to a Map/Reduce script or a scheduled script.
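
A back-of-the-envelope check along these lines can be sketched in plain JavaScript. Everything here is hypothetical: the function names, the cost of 10 units per operation, and the budget of 1000 units are placeholders, so check NetSuite's governance documentation for the real figures that apply to your script type and API calls.

```javascript
// Estimates governance units from the record count, an assumed cost per
// operation, and the growth rate of the algorithm ("linear" or "quadratic").
function estimateUnits(recordCount, unitsPerOperation, growth) {
  const operations = growth === "quadratic"
    ? recordCount * recordCount
    : recordCount;
  return operations * unitsPerOperation;
}

// Compares the estimate with an assumed budget and suggests where to run.
function suggestScriptType(recordCount, unitsPerOperation, growth, unitBudget) {
  const estimatedUnits = estimateUnits(recordCount, unitsPerOperation, growth);
  return {
    estimatedUnits: estimatedUnits,
    suggestion: estimatedUnits <= unitBudget
      ? "keep in the User Event script"
      : "consider a Map/Reduce or scheduled script"
  };
}

// Placeholder figures: 50 records, 10 units per operation, 1000-unit budget.
console.log(suggestScriptType(50, 10, "linear", 1000));
console.log(suggestScriptType(50, 10, "quadratic", 1000));
```

With the same 50 records, the linear estimate fits inside the assumed budget while the quadratic one exceeds it many times over, which is the kind of gap that points toward offloading the work.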
