A robots.txt file plays an important role in your website's SEO. It tells search engine bots, such as Googlebot, which parts of your website they may crawl and where to find your sitemap, which in turn helps them identify the canonical URLs to index.

Your NetSuite website has a very basic robots.txt file by default. You can view its contents by adding /robots.txt to the end of your website's domain. This default file remains in use until you create your own and add it to your site. We recommend creating your own robots.txt file because the default file is very basic and cannot be edited.

Prerequisites

We recommend you generate a sitemap so that you can include it in your robots.txt file. To generate your sitemap, go to COMMERCE > MARKETING > SITEMAP GENERATOR.

Creating a robots.txt file

To create your robots.txt file, open a plain text editor, such as Notepad, on your computer.

Things to Include in Your robots.txt File

Sitemap: Include the URL of your sitemap in your robots.txt file so that Google can easily find and crawl the URLs on your website and identify which URLs are canonical.

Crawl-delay: This tells bots how long to wait between successive requests to your site. The value is in seconds; common values are 10 or 20. Not every bot honors this directive. Googlebot, for example, ignores it.

User-agent: This is the bot that the directives in your file apply to. You could, for example, specify Googlebot. However, it is very common to simply use *, which indicates that your directives apply to all bots.

Disallow/Allow: These directives tell bots not to crawl, or to crawl, specific areas of your site. They are very useful for keeping bots away from duplicate content created by elements like item facets. For example, if you wanted bots not to crawl the item facet Red, you would enter Disallow: /Red/

Noindex: This directive indicates that you do not want your page or site to appear in search results. It is commonly used for staging domains or for the production domain before the site has gone live; in both of these instances, you would not want your site to appear in search results. Be aware that Noindex was never an official robots.txt directive, and Google stopped honoring it in robots.txt files on September 1, 2019, so do not rely on it alone. Disallow is what keeps compliant bots from crawling the site.
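As a rough illustration of how these directives fit together for a site that is already live, the following Python sketch assembles the text of a robots.txt file. The function name build_robots_txt, the example.com sitemap URL, and the chosen values are assumptions made for illustration; none of them come from NetSuite.

```python
def build_robots_txt(sitemap_url, disallow_paths, user_agent="*", crawl_delay=None):
    """Assemble robots.txt text from the directives described above.

    Hypothetical helper for illustration only. The rules are written on
    consecutive lines directly under the User-agent line they belong to.
    """
    lines = [f"Sitemap: {sitemap_url}", "", f"User-agent: {user_agent}"]
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    for path in disallow_paths:
        lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


# Assumed values: a placeholder sitemap URL, a 10-second crawl delay,
# and the Red item facet blocked from crawling.
robots_text = build_robots_txt(
    "https://www.example.com/sitemap.xml",
    ["/Red/"],
    crawl_delay=10,
)
print(robots_text)
```

The printed text could then be pasted into your plain text editor. Generating the file this way is optional; typing the same lines by hand works just as well.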

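Here is an example of a basic robots.txt file. In the file you save, put each directive on its own line, and do not leave blank lines between the User-agent line and the rules that follow it, because some parsers treat a blank line as the end of a group of rules.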

Sitemap: urlofyoursitemap

User-agent: *

Crawl-delay: 20

Disallow: /

Noindex: /

This website has not yet gone live, so the robots.txt file tells bots not to crawl or index any part of it. The sitemap is listed at the top of the file so that Google can find the URLs on your site. The crawl delay is set to 20 seconds, and the user agent is set to *, which indicates that the directives apply to all bots. The Disallow and Noindex directives are set to /, which means that the entire site cannot be crawled or indexed. If no Disallow or Noindex directives are specified, bots crawl and index the site by default.

Save your plain text file and name it robots. With the .txt extension, the full file name is robots.txt.
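To see how a parser might read rules like these, here is a minimal sketch using Python's standard urllib.robotparser module. The example.com URLs and the variable names are assumptions for illustration, and this parser is only one interpretation of the rules: real search engine bots may behave differently (for instance, this module silently skips the Noindex line).

```python
from urllib.robotparser import RobotFileParser

# Pre-launch rules: block the whole site (placeholder sitemap URL).
PRE_LAUNCH_RULES = """\
Sitemap: https://www.example.com/sitemap.xml
User-agent: *
Crawl-delay: 20
Disallow: /
Noindex: /
"""

# Rules for a live site that only blocks the Red item facet.
LIVE_RULES = """\
User-agent: *
Disallow: /Red/
"""


def parse_rules(text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


pre_launch = parse_rules(PRE_LAUNCH_RULES)
live = parse_rules(LIVE_RULES)

# Whether a bot named "Googlebot" may fetch each URL under each rule set.
print(pre_launch.can_fetch("Googlebot", "https://www.example.com/Red/shirt"))
print(live.can_fetch("Googlebot", "https://www.example.com/Red/shirt"))
print(live.can_fetch("Googlebot", "https://www.example.com/shirts"))

# The crawl delay and sitemap list that this parser extracted.
print(pre_launch.crawl_delay("Googlebot"))
print(pre_launch.site_maps())
```

With the pre-launch rules, every URL is reported as blocked. With the live rules, only URLs under /Red/ are blocked and everything else is allowed.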

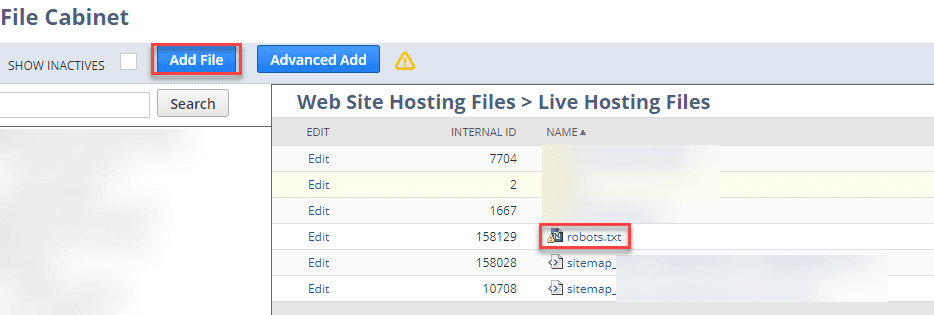

Upload your robots.txt file to NetSuite.

To apply your robots.txt file to your website, upload it to the Live Hosting Files folder of your NetSuite File Cabinet. Navigate to DOCUMENTS > FILES > FILE CABINET > WEBSITE HOSTING FILES > LIVE HOSTING FILES and upload your robots.txt file there. Do not put it in any subfolder of Live Hosting Files; it should be in the same location as your sitemap files.

To verify that your file is being used instead of the default robots.txt file, add /robots.txt to the end of your site's domain and check that the contents match your file. No file in the File Cabinet acts as the default robots.txt file, which is why you cannot edit it. Once you upload your own file to Live Hosting Files, it replaces the default file.
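If you would rather script this check than compare the files by eye, the following Python sketch downloads /robots.txt from a domain and compares it with your local copy. The function names and the www.example.com domain are hypothetical, and the sketch assumes your site is served over HTTPS.

```python
from urllib.request import urlopen


def fetch_text(url):
    """Download a URL and return its body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def normalize(text):
    """Split into lines, ignoring line-ending and trailing-whitespace differences."""
    return [line.rstrip() for line in text.strip().splitlines()]


def is_custom_file_live(domain, local_text, fetch=fetch_text):
    """Return True if the robots.txt served at the domain matches local_text.

    The fetch parameter exists so the download step can be swapped out,
    for example when trying the function without network access.
    """
    live_text = fetch(f"https://{domain}/robots.txt")
    return normalize(live_text) == normalize(local_text)


# Example usage (assumed domain and file location; uncomment to run):
# with open("robots.txt", encoding="utf-8") as handle:
#     print(is_custom_file_live("www.example.com", handle.read()))
```

A result of False may simply mean the upload has not taken effect yet or that the file was placed in a subfolder, so it is worth checking the file location before assuming something else is wrong.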

